Evaluation Metrics in Recommendation Systems

Accuracy and Error Based Metrics

Mean Absolute Error (MAE)

MAE is the average absolute difference between predicted and actual ratings, measured for example on a movie rating dataset[2].

`def mean_absolute_error(actual_ratings, predicted_ratings):`
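A minimal sketch of how MAE might be computed, assuming the ratings arrive as two equal-length sequences of numbers. The input validation and the sample ratings are illustrative assumptions, not part of the original snippet.

```python
def mean_absolute_error(actual_ratings, predicted_ratings):
    """Average of |actual - predicted| over paired ratings."""
    if len(actual_ratings) != len(predicted_ratings):
        raise ValueError("rating sequences must have the same length")
    if not actual_ratings:
        raise ValueError("rating sequences must not be empty")
    total_error = sum(
        abs(actual - predicted)
        for actual, predicted in zip(actual_ratings, predicted_ratings)
    )
    return total_error / len(actual_ratings)


# Made-up ratings on a 1-5 scale; the absolute errors are 0.5, 0, 1 and 2.
actual = [4, 3, 5, 2]
predicted = [3.5, 3, 4, 4]
print(mean_absolute_error(actual, predicted))  # 0.875
```

The result is in rating units, so 0.875 reads as "off by a bit less than one star on average".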

Root Mean Square Error (RMSE)

Mean Square Error (MSE) is the average squared difference between predicted and actual ratings. Squaring removes the sign of each error, but it also expresses the result in squared rating units, so an MSE value cannot be compared directly with the ratings themselves.

RMSE takes the square root of MSE, which brings the error back to the same scale as the ratings.

`import math`
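A minimal sketch of MSE and RMSE under the same assumption of two equal-length numeric sequences. The helper name `mean_squared_error` and the sample ratings are assumptions made for illustration.

```python
import math


def mean_squared_error(actual_ratings, predicted_ratings):
    """Average of (actual - predicted)^2 over paired ratings."""
    if len(actual_ratings) != len(predicted_ratings):
        raise ValueError("rating sequences must have the same length")
    if not actual_ratings:
        raise ValueError("rating sequences must not be empty")
    squared_errors = [
        (actual - predicted) ** 2
        for actual, predicted in zip(actual_ratings, predicted_ratings)
    ]
    return sum(squared_errors) / len(squared_errors)


def root_mean_squared_error(actual_ratings, predicted_ratings):
    """Square root of MSE, expressed in the same units as the ratings."""
    return math.sqrt(mean_squared_error(actual_ratings, predicted_ratings))


# Made-up ratings; the squared errors are 0.25, 0, 1 and 4.
actual = [4, 3, 5, 2]
predicted = [3.5, 3, 4, 4]
print(mean_squared_error(actual, predicted))  # 1.3125
print(root_mean_squared_error(actual, predicted))
```

On this data the MAE is 0.875 while the RMSE is larger, because squaring gives the single two-star miss more weight than the smaller errors.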

Precision, Precision@K

Precision measures the proportion of recommended items that are relevant. The formula[5] is:

$$\text{Precision} = \frac{|\{\text{recommended items}\} \cap \{\text{relevant items}\}|}{|\{\text{recommended items}\}|}$$

Precision@K[4] adds a top-K bound. Users are rarely willing to browse every recommended item and typically look only at the first few, so the recommendation list is capped at its top K items before precision is computed.

`def precision(recommended_items, relevant_items):`
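A minimal sketch of precision and Precision@K, assuming `recommended_items` is an ordered list without duplicates and `relevant_items` is any collection of item IDs. The `precision_at_k` helper is a hypothetical name. It divides by the number of items actually present in the top K; some definitions divide by K itself even when the list is shorter.

```python
def precision(recommended_items, relevant_items):
    """Fraction of recommended items that are relevant."""
    if not recommended_items:
        return 0.0
    relevant = set(relevant_items)
    hits = sum(1 for item in recommended_items if item in relevant)
    return hits / len(recommended_items)


def precision_at_k(recommended_items, relevant_items, k):
    """Precision computed on the first k recommended items only."""
    if k <= 0:
        raise ValueError("k must be a positive integer")
    return precision(recommended_items[:k], relevant_items)


# Made-up item IDs: "a" and "c" are both recommended and relevant.
recommended = ["a", "b", "c", "d", "e"]
relevant = {"a", "c", "f"}
print(precision(recommended, relevant))          # 2 hits out of 5 items
print(precision_at_k(recommended, relevant, 3))  # 2 hits out of the top 3
```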

Recall, Recall@K

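Recall measures the proportion of relevant items that are recommended. The formula[5] is:

$$\text{Recall} = \frac{|\{\text{recommended items}\} \cap \{\text{relevant items}\}|}{|\{\text{relevant items}\}|}$$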

`def recall(recommended_items, relevant_items):`
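A minimal sketch of recall and Recall@K under the same assumptions as for precision (ordered, duplicate-free recommendations). `recall_at_k` is a hypothetical helper name, and returning 0.0 for a user with no relevant items is one possible convention.

```python
def recall(recommended_items, relevant_items):
    """Fraction of relevant items that appear among the recommendations."""
    relevant = set(relevant_items)
    if not relevant:
        return 0.0
    hits = sum(1 for item in recommended_items if item in relevant)
    return hits / len(relevant)


def recall_at_k(recommended_items, relevant_items, k):
    """Recall computed on the first k recommended items only."""
    if k <= 0:
        raise ValueError("k must be a positive integer")
    return recall(recommended_items[:k], relevant_items)


# Made-up item IDs: two of the three relevant items are recommended.
recommended = ["a", "b", "c", "d", "e"]
relevant = {"a", "c", "f"}
print(recall(recommended, relevant))          # 2 of 3 relevant items found
print(recall_at_k(recommended, relevant, 1))  # only "a" is in the top 1
```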

Precision-Recall Curve [3]

ROC Curve [3]

Ranking Metrics: Quality Over Quantity

Accuracy-based metrics describe the overall performance of a recommendation system's output, but they say nothing about how the items were ordered. A model can have a good RMSE score, yet if the top three items it recommends are not relevant to the user, the recommendation is not very useful. Ranking metrics assess the quality of the ordering of recommended items, checking that the most relevant items appear at the top of the list.

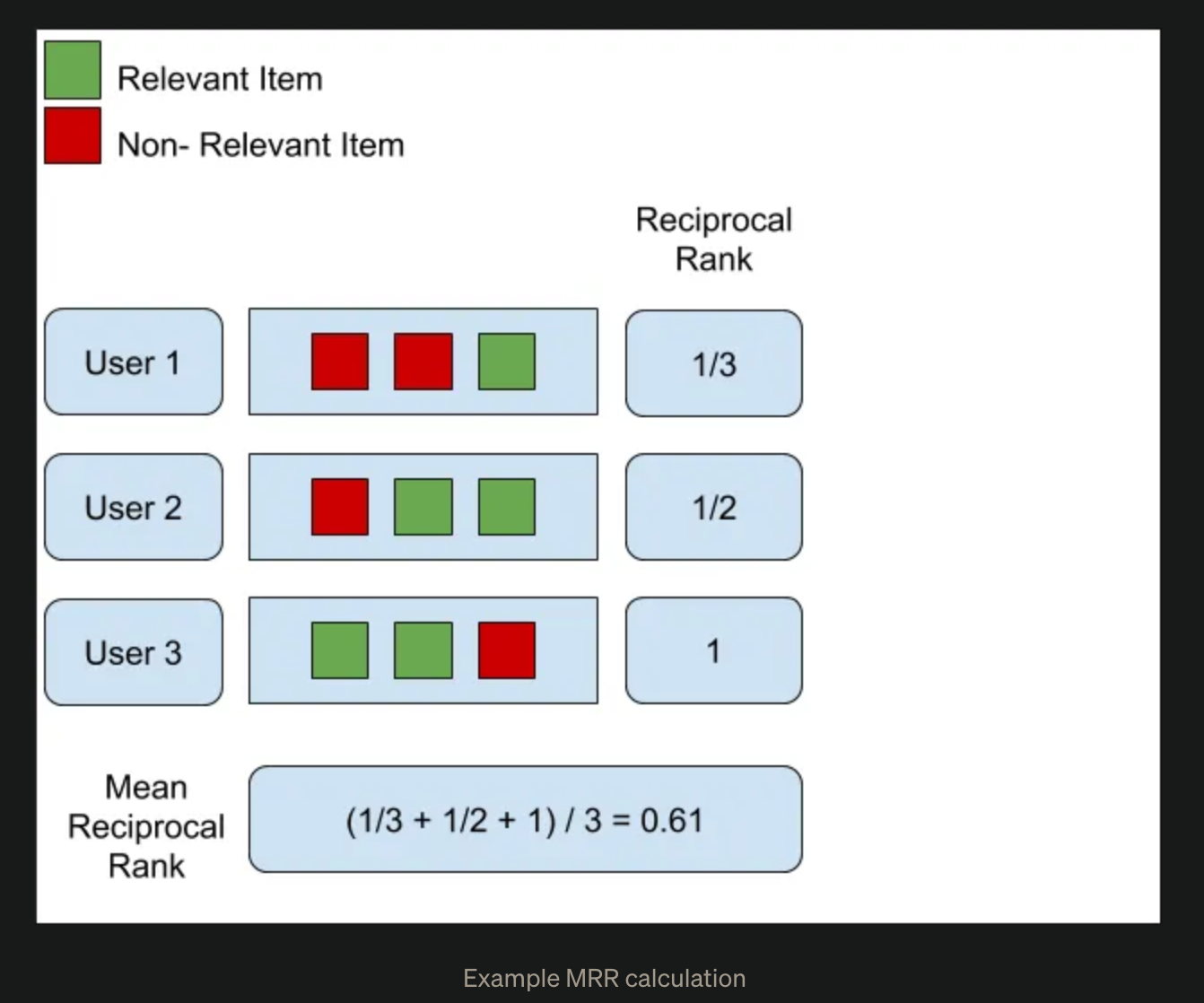

Mean Reciprocal Rank (MRR)

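MRR is the average, across users, of the reciprocal rank of the first relevant recommendation. For a single user the reciprocal rank is 1 divided by the position of the first relevant item, and it is conventionally taken as 0 when no relevant item is recommended.

Pros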

- Position-aware: MRR takes into account the position of the first relevant item in the recommendation list, rewarding systems that rank relevant items higher. For example, a list whose first relevant item is at position 3 has a reciprocal rank of 1/3, which is greater than the 1/4 of a list whose first relevant item is at position 4.

- Average performance: MRR calculates the mean reciprocal rank across all users, providing an overall measure of the recommendation system’s performance.

- Intuitive interpretation: MRR scores range from 0 to 1, with higher values indicating better performance.

Cons

- Limited scope: MRR focuses exclusively on the first relevant item in the list and does not evaluate the rest of the list of recommended items. This can be a limitation in scenarios where multiple relevant items or the overall ranking quality are important.

- Binary relevance assumption: MRR assumes a binary relevance scale (either relevant or not relevant) and does not account for varying degrees of relevance on a continuous scale. This can be a limitation in situations where the relevance of items is not binary and needs to be quantified more granularly.

- Lack of personalization: While MRR provides an average performance measure across all users, it may not fully capture the personalized aspect of recommendation systems. A high MRR score does not necessarily guarantee that the recommendation system is providing good recommendations for each individual user.

- Sensitivity to outliers: MRR can be sensitive to outliers, as it calculates the reciprocal rank of the first relevant item for each user. A few users with very low reciprocal ranks can significantly impact the overall MRR score, potentially making it less reliable for evaluating the general performance of a recommendation system.

`def mean_reciprocal_rank(recommended_items_list, relevant_items_list):`
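A minimal sketch of MRR, assuming `recommended_items_list` holds one ordered recommendation list per user and `relevant_items_list` holds the matching collection of relevant items for each user. Scoring a user with no relevant recommendation as 0 is an assumption of this sketch.

```python
def mean_reciprocal_rank(recommended_items_list, relevant_items_list):
    """Mean over users of 1 / (rank of the first relevant recommendation)."""
    if len(recommended_items_list) != len(relevant_items_list):
        raise ValueError("one list of relevant items is needed per user")
    reciprocal_ranks = []
    for recommended, relevant_items in zip(recommended_items_list, relevant_items_list):
        relevant = set(relevant_items)
        reciprocal_rank = 0.0  # stays 0 if nothing relevant was recommended
        for rank, item in enumerate(recommended, start=1):
            if item in relevant:
                reciprocal_rank = 1.0 / rank
                break  # only the first relevant item counts
        reciprocal_ranks.append(reciprocal_rank)
    if not reciprocal_ranks:
        return 0.0
    return sum(reciprocal_ranks) / len(reciprocal_ranks)


# Made-up data: first relevant item at rank 1, at rank 3, and never.
recommended_lists = [["a", "b", "c"], ["d", "e", "f"], ["g", "h", "i"]]
relevant_lists = [{"a"}, {"f"}, {"z"}]
print(mean_reciprocal_rank(recommended_lists, relevant_lists))  # (1 + 1/3 + 0) / 3
```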

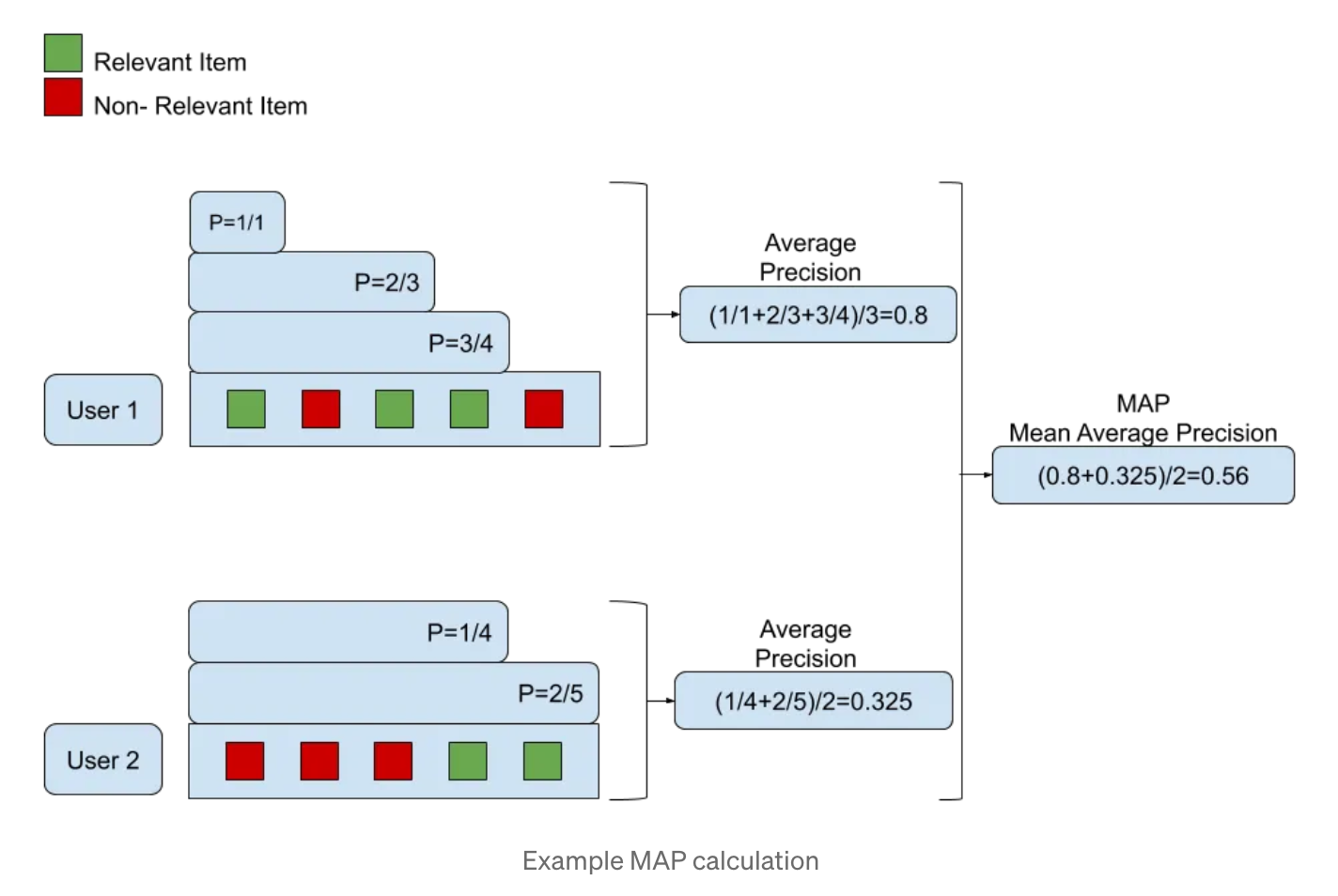

Mean Average Precision (MAP)

Like MRR, MAP is a per-user score averaged over all users: it computes the average precision (AP) for each user and takes the mean. It takes into account both the order and the relevance of recommended items. The larger the value, the better the recommendation quality.

Pros

- Position-aware: MAP takes into account the position of relevant items in the recommendation list, rewarding systems that rank relevant items higher.

- Relevance-aware: MAP considers the relevance of items by calculating the average precision for each user, which is the average of the precision scores at each relevant item’s position.

- Average performance: MAP calculates the mean average precision across all users, providing an overall measure of the recommendation system’s performance.

- Robustness: MAP is less sensitive to outliers compared to some other metrics, as it calculates the average precision across multiple positions in the recommendation list for each user.

Cons

- Binary relevance assumption: with ratings on a scale from 1 to 5 stars, for example, the evaluation first has to threshold the ratings to obtain binary relevance labels. One option is to treat only ratings above 4 as relevant. The manually chosen threshold introduces bias into the evaluation metric[4].

- Lack of personalization

- Not suitable for all scenarios: MAP is more appropriate for recommendation scenarios where a ranked list of items is provided to users. It may not be suitable for other types of recommendation scenarios, such as collaborative filtering or content-based recommendations that do not involve explicit ranking.

`def average_precision(recommended_items, relevant_items):`
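A minimal sketch of average precision and MAP, assuming binary relevance and ordered, duplicate-free recommendation lists. This version divides by the total number of relevant items, so relevant items that are never recommended lower the score; other variants divide by the number of hits instead. `mean_average_precision` is a hypothetical helper name.

```python
def average_precision(recommended_items, relevant_items):
    """Mean of precision@rank taken at each rank that holds a relevant item."""
    relevant = set(relevant_items)
    if not relevant:
        return 0.0
    hits = 0
    precision_sum = 0.0
    for rank, item in enumerate(recommended_items, start=1):
        if item in relevant:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    return precision_sum / len(relevant)


def mean_average_precision(recommended_items_list, relevant_items_list):
    """Mean of the per-user average precision."""
    if len(recommended_items_list) != len(relevant_items_list):
        raise ValueError("one list of relevant items is needed per user")
    scores = [
        average_precision(recommended, relevant)
        for recommended, relevant in zip(recommended_items_list, relevant_items_list)
    ]
    return sum(scores) / len(scores) if scores else 0.0


# User 1: relevant items at ranks 1 and 3, so AP = (1/1 + 2/3) / 2.
# User 2: the only relevant item is at rank 2, so AP = 1/2.
print(average_precision(["a", "b", "c", "d"], {"a", "c"}))
print(mean_average_precision([["a", "b", "c", "d"], ["x", "y"]], [{"a", "c"}, {"y"}]))
```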

Normalized Discounted Cumulative Gain (nDCG)

Highly relevant items are more useful than marginally relevant ones, which in turn are more useful than irrelevant ones. nDCG evaluates ranking quality on that basis by giving more weight to relevant items that appear near the top of the recommendation list. It is normalized so that scores are comparable across different users and queries.

Cumulative Gain (CG): the sum of the relevance scores of the items in the list, hence the term cumulative. For example, relevance might be scored as 2 for the most relevant items, 1 for somewhat relevant items, and 0 for the least relevant items.

Discounted Cumulative Gain (DCG): it penalizes relevant items that appear lower in the list. A recommender that places a relevant item at the end of the list has done a poor job, so that item's contribution should be discounted. To do so, each item's relevance score is divided by the logarithm of its position in the list, commonly log2(rank + 1) so that the first item is not divided by log(1) = 0.

Normalized Discounted Cumulative Gain (nDCG): nDCG normalizes DCG so that lists of different lengths can be compared. To do so, we sort the items by relevance and compute the DCG of that sorted list. This is the ideal DCG, the best score the list could achieve. Dividing a list's DCG by its ideal DCG gives the normalized score for that list.

Pros

- Position-aware: Items that are ranked higher (closer to the top) contribute more to the nDCG score, reflecting the fact that users are more likely to interact with items at the top of the list.

- Relevance-weighted: nDCG incorporates the relevance of each recommended item, allowing it to differentiate between items with varying degrees of relevance. This makes it suitable for situations where the relevance of items is not binary (e.g., partially relevant, highly relevant) and can be quantified on a continuous scale.

- Normalized: nDCG is normalized against the ideal ranking, which means it can be compared across different queries or users.

- Suitable for diverse recommendation scenarios: nDCG is applicable to various recommendation scenarios, including search engine result ranking, collaborative filtering, and content-based recommendation.

- Intuitive interpretation: nDCG scores range from 0 to 1, with higher values indicating better ranking quality.

Cons

- Lack of personalization

- Binary relevance assumption: Although nDCG can handle varying degrees of relevance, it is often used with binary relevance judgments in practice.

- Sensitive to the choice of the ideal ranking: The normalization factor in nDCG is based on the ideal ranking, which can sometimes be subjective or difficult to determine. The choice of the ideal ranking can influence the nDCG score, potentially affecting its consistency and reliability.

`import math`
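A minimal sketch of DCG and nDCG, assuming the input is the list of graded relevance scores of the recommended items in ranked order, and using the common log2(rank + 1) discount. The ideal ranking here is built only from the items in the list, which is an assumption; a stricter version would also include relevant items that were never recommended. The function names and the optional `k` cutoff are hypothetical.

```python
import math


def dcg(relevance_scores):
    """Sum of relevance / log2(rank + 1), with ranks starting at 1."""
    return sum(
        relevance / math.log2(rank + 1)
        for rank, relevance in enumerate(relevance_scores, start=1)
    )


def ndcg(relevance_scores, k=None):
    """DCG of the given order divided by the DCG of the ideal order."""
    scores = list(relevance_scores)
    ideal_scores = sorted(scores, reverse=True)  # best possible ordering
    if k is not None:
        scores = scores[:k]
        ideal_scores = ideal_scores[:k]
    ideal_dcg = dcg(ideal_scores)
    if ideal_dcg == 0:
        return 0.0  # no relevant items at all
    return dcg(scores) / ideal_dcg


# Relevance grades as in the text: 2 = most, 1 = somewhat, 0 = least relevant.
print(ndcg([2, 1, 0]))  # already ideally ordered, so the score is 1.0
print(ndcg([0, 1, 2]))  # worst ordering of the same items, score below 1
```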

Spearman Rank Correlation Evaluation [2] [12]

Pearson Correlation [12] [14]

Rank-Biased Precision (RBP) [15]

Expected Reciprocal Rank (ERR) [15]

Coverage and Diversity Metrics

Catalog Coverage

It measures the proportion of items in the catalog that are recommended at least once.

`def catalog_coverage(recommended_items_list, catalog_items):`
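A minimal sketch of catalog coverage, assuming `recommended_items_list` holds one recommendation list per user and `catalog_items` is the full set of item IDs. Recommended items that are not in the catalog are ignored, which is an assumption of this sketch.

```python
def catalog_coverage(recommended_items_list, catalog_items):
    """Fraction of catalog items recommended to at least one user."""
    catalog = set(catalog_items)
    if not catalog:
        return 0.0
    recommended = set()
    for items in recommended_items_list:
        recommended.update(items)
    # Count only recommended items that actually exist in the catalog.
    return len(recommended & catalog) / len(catalog)


# Made-up data: "a", "b" and "c" are recommended out of a 5-item catalog.
recommended_lists = [["a", "b"], ["b", "c"]]
catalog = ["a", "b", "c", "d", "e"]
print(catalog_coverage(recommended_lists, catalog))  # 3 of 5 items covered
```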

Prediction Coverage

It measures the proportion of possible user-item pairs for which the recommendation system can make predictions.

`def prediction_coverage(predicted_ratings, total_users, total_items):`
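A minimal sketch of prediction coverage. The shape of `predicted_ratings` is not specified in the source, so this sketch assumes a dictionary that maps `(user, item)` pairs to a predicted rating, with `None` marking pairs the system could not score.

```python
def prediction_coverage(predicted_ratings, total_users, total_items):
    """Fraction of all user-item pairs that received a prediction."""
    possible_pairs = total_users * total_items
    if possible_pairs == 0:
        return 0.0
    # Assumed format: {(user, item): rating or None}.
    predicted_pairs = [
        pair for pair, rating in predicted_ratings.items() if rating is not None
    ]
    return len(predicted_pairs) / possible_pairs


# Made-up data: 2 users x 3 items = 6 possible pairs, 3 usable predictions.
predictions = {
    ("u1", "i1"): 4.2,
    ("u1", "i2"): 3.1,
    ("u2", "i1"): None,  # the model could not score this pair
    ("u2", "i3"): 2.5,
}
print(prediction_coverage(predictions, total_users=2, total_items=3))  # 0.5
```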

Diversity

Diversity measures how different the items in a recommendation list are from one another. A diverse list helps keep the experience personalized and engaging, supports exploration, reduces filter bubbles, promotes long-tail items, and makes the system more robust.

`import numpy as np`
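One common way to quantify diversity is intra-list diversity: the average pairwise dissimilarity between the items of a single recommendation list. The sketch below assumes each item has a numeric feature vector (for example genre indicators) and uses 1 minus cosine similarity as the dissimilarity. The source's own snippet imports NumPy; this sketch sticks to the standard library to stay self-contained, and the function and parameter names are hypothetical.

```python
import math
from itertools import combinations


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length numeric vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)


def intra_list_diversity(recommended_items, item_features):
    """Average (1 - cosine similarity) over all pairs of recommended items."""
    pairs = list(combinations(recommended_items, 2))
    if not pairs:
        return 0.0  # fewer than two items, nothing to compare
    total = sum(
        1.0 - cosine_similarity(item_features[a], item_features[b])
        for a, b in pairs
    )
    return total / len(pairs)


# Made-up feature vectors: "a" and "b" are identical, "c" is orthogonal to both.
features = {"a": [1, 0], "b": [1, 0], "c": [0, 1]}
print(intra_list_diversity(["a", "b", "c"], features))  # two of three pairs differ
print(intra_list_diversity(["a", "b"], features))       # identical items, 0.0
```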

Serendipity

Serendipity measures the degree to which the recommended items are both relevant and unexpected, promoting the discovery of novel and interesting items. A serendipitous recommendation list introduces users to items they would not have expected to enjoy or find relevant, which can make the experience more enjoyable and engaging.

Measuring serendipity is challenging because it combines relevance, surprise, and novelty. Here is a Python function that calculates a basic version of serendipity:

`def serendipity(recommended_items_list, relevant_items_list, popular_items, k=10):`
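A minimal sketch of one basic serendipity score. It keeps the signature shown above, but the body is an assumption: an item counts as "unexpected" simply when it is not in `popular_items`, and each user's score is the share of their top-k recommendations that are both relevant and unexpected.

```python
def serendipity(recommended_items_list, relevant_items_list, popular_items, k=10):
    """Mean share of top-k items that are relevant and not popular."""
    if len(recommended_items_list) != len(relevant_items_list):
        raise ValueError("one list of relevant items is needed per user")
    popular = set(popular_items)
    user_scores = []
    for recommended, relevant_items in zip(recommended_items_list, relevant_items_list):
        top_k = recommended[:k]
        if not top_k:
            user_scores.append(0.0)
            continue
        relevant = set(relevant_items)
        # Assumption: "unexpected" means "not among the popular items".
        surprising_hits = [
            item for item in top_k if item in relevant and item not in popular
        ]
        user_scores.append(len(surprising_hits) / len(top_k))
    return sum(user_scores) / len(user_scores) if user_scores else 0.0


# User 1: only "c" is relevant and unpopular (1 of 3). User 2: both items qualify.
recommended_lists = [["a", "b", "c"], ["d", "e"]]
relevant_lists = [{"a", "c"}, {"d", "e"}]
print(serendipity(recommended_lists, relevant_lists, popular_items={"a"}, k=10))
```

Using a popularity list as the stand-in for "expected" is a simple proxy; a per-user notion of expectedness would need a baseline recommender or the user's history.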

Popularity[2]

Novelty[2]

Hit Ratio (HR)[5]

It is the fraction of users for whom the relevant item is included in the recommendation list of length K:

$$\text{HR@}K = \frac{|U_{hit}^{K}|}{|U_{all}|}$$

where $|U_{hit}^{K}|$ is the number of users for whom the relevant item is included in the top K recommended items, and $|U_{all}|$ is the total number of users in the test dataset.
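A minimal sketch of the hit ratio, assuming one ordered recommendation list and one collection of relevant (held-out) items per user. A user counts as a hit when at least one relevant item appears in their top K. The function name `hit_ratio_at_k` is hypothetical.

```python
def hit_ratio_at_k(recommended_items_list, relevant_items_list, k=10):
    """Fraction of users with at least one relevant item in their top k."""
    if len(recommended_items_list) != len(relevant_items_list):
        raise ValueError("one list of relevant items is needed per user")
    if not recommended_items_list:
        return 0.0
    hits = 0
    for recommended, relevant_items in zip(recommended_items_list, relevant_items_list):
        if set(recommended[:k]) & set(relevant_items):
            hits += 1
    return hits / len(recommended_items_list)


# Made-up data with k=2: users 1 and 2 are hits, user 3's item is at rank 3.
recommended_lists = [["a", "b", "c"], ["d", "e", "f"], ["g", "h", "i"]]
relevant_lists = [{"a"}, {"e"}, {"i"}]
print(hit_ratio_at_k(recommended_lists, relevant_lists, k=2))  # 2 of 3 users
```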

References

- 1. The Ultimate Guide to Evaluating Your Recommendation System

- 2. An Exhaustive List of Methods to Evaluate Recommender Systems

- 3. Why You Should Stop Using the ROC Curve

- 4. MRR vs MAP vs NDCG: Ranking-Aware Evaluation Metrics And When to Use Them

- 5. Ranking Evaluation Metrics for Recommender Systems

- 6. Evaluating A Real-Life Recommender System, Error-Based and Ranking-Based

- 7. Paper: Evaluating Collaborative Filtering Recommender Systems

- 8. Paper: A Survey of Accuracy Evaluation Metrics of Recommendation Tools

- 9. Understanding NDCG as a Metric for your Recommendation System

- 10. A Complete Tutorial On Off-Policy Evaluation for Recommendation Systems

- 11. Popular Evaluation Metrics in Recommender Systems Explained

- 12. Metrics of Recommender Systems: An Overview

- 14. A Book Recommendation System

- 15. Comprehensive Guide to Ranking Evaluation Metrics