Interest Graph

An interest graph is an important knowledge asset that connects objects and users on social platforms. For example, Pinterest[2] uses an interest graph to relate Pins, Boards, and Pinners and to power downstream applications such as recommendations. Similarly, Twitter[4] leverages an interest graph to suggest relevant tweets to users based on their connections and engagement with topics. Because building an interest graph and applying it across ML applications is an appealing idea, I’ve compiled a collection of references and personal opinions for further investigation.

Knowledge-based Interest Graph

Constructing a robust knowledge-based interest graph requires aggregating both internal and external knowledge. Pinterest’s early interest graph development[1] provides a helpful example. Key steps include:

- Extracting n-gram phrases from user content such as Pins, Boards, and descriptions to get an initial set of interest candidates, using grammar rules to filter out low-quality terms.

- Comparing extracted terms against external sources like search queries and Wikipedia to validate relevance and identify proper nouns.

- Applying an internal blacklist to filter abusive, explicit, and platform-specific stop words.

- Leveraging third-party manual curation and crowdsourcing to evaluate term quality and validate that each term stands alone as a concept.
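The extraction and filtering steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: `EXTERNAL_VOCAB`, `BLACKLIST`, and `STOPWORDS` are hypothetical stand-ins for search-query logs, Wikipedia titles, and an internal blacklist, and the stopword check is a crude substitute for real grammar rules. The human curation step is not modeled.

```python
import re
from collections import Counter

# Hypothetical stand-ins: in practice these would come from search logs,
# Wikipedia titles, and a curated internal blacklist.
EXTERNAL_VOCAB = {"street style", "sourdough bread", "diy crafts"}
BLACKLIST = {"click here", "follow me"}
STOPWORDS = {"the", "a", "an", "my", "for", "and", "of", "to"}


def ngrams(tokens, n_max=3):
    """Yield every contiguous n-gram of length 1..n_max."""
    for n in range(1, n_max + 1):
        for i in range(len(tokens) - n + 1):
            yield tuple(tokens[i:i + n])


def extract_candidates(texts, n_max=3):
    """Count n-gram interest candidates across user-generated texts."""
    counts = Counter()
    for text in texts:
        tokens = re.findall(r"[a-z0-9']+", text.lower())
        for gram in ngrams(tokens, n_max):
            # Crude stand-in for grammar rules: drop n-grams that start or end with a stopword.
            if gram[0] in STOPWORDS or gram[-1] in STOPWORDS:
                continue
            term = " ".join(gram)
            if term in BLACKLIST:  # abusive, explicit, or platform-specific terms
                continue
            counts[term] += 1
    return counts


def validate(candidates):
    """Keep only candidates confirmed by the external vocabulary."""
    return {term: c for term, c in candidates.items() if term in EXTERNAL_VOCAB}


texts = [
    "My favorite street style looks",
    "Sourdough bread for the weekend",
    "DIY crafts: click here",
]
print(validate(extract_candidates(texts)))
```

Filtering before validation keeps the external lookup small; in a real pipeline the surviving terms would then go to human reviewers.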

Pinterest’s interest graph organizes a subset of commonly encountered interests into a hierarchical taxonomy. This multi-level structure currently contains tens of thousands of interests, organized by similarity and user activity patterns[2]. The taxonomy spans 10 levels of granularity, and the top level has 24 high-level interest categories such as “women’s fashion” and “DIY crafts”. The graph’s structure and vocabulary continue to expand over time through ongoing curation.
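One simple way to hold such a taxonomy in memory is a child-to-parent map, which makes depth and top-level lookups cheap. The sketch below is a toy, assuming a hypothetical `PARENT` dictionary with two top-level categories and three levels; the real taxonomy has 24 top-level categories and 10 levels, and its storage format is not described in the sources.

```python
# Hypothetical toy taxonomy stored as child -> parent (None marks a top-level category).
PARENT = {
    "women's fashion": None,
    "diy crafts": None,
    "street style": "women's fashion",
    "denim jackets": "street style",
    "paper crafts": "diy crafts",
}


def path_to_root(interest):
    """Return [interest, parent, grandparent, ..., top-level category]."""
    path = [interest]
    while PARENT[path[-1]] is not None:
        path.append(PARENT[path[-1]])
    return path


def level(interest):
    """Depth in the taxonomy, where level 1 is a top-level category."""
    return len(path_to_root(interest))


def level1_ancestor(interest):
    """Top-level category that an interest rolls up to."""
    return path_to_root(interest)[-1]


print(level("denim jackets"), level1_ancestor("denim jackets"))  # 3 women's fashion
```

A level-1 lookup like this is the kind of hierarchy signal that taxonomy-based features can build on.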

Several key metrics characterize an interest graph’s quality and scalability:

- Precision - Accuracy of modeled interest connections.

- Coverage - Number of interests mapped per object or item.

- Multilingual - Support for representing interests in multiple languages.

- Space Efficiency - Optimizing storage needs as the graph grows.
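Precision and coverage can be made concrete with the precision@k, recall@k, and coverage definitions commonly used to evaluate interest mappings. This is a minimal sketch, assuming predictions are ranked lists of interest names and human judgments are sets of relevant interests. All example data is made up, and multilingual support and space efficiency are not captured here.

```python
def precision_at_k(predicted, relevant, k):
    """Share of the top-k predicted interests that human judges marked relevant."""
    hits = sum(1 for interest in predicted[:k] if interest in relevant)
    return hits / k


def recall_at_k(predicted, relevant, k):
    """Share of all relevant interests that appear in the top-k predictions."""
    if not relevant:
        return 0.0
    hits = sum(1 for interest in predicted[:k] if interest in relevant)
    return hits / len(relevant)


def coverage(mapping):
    """mapping: object_id -> list of interest_ids.

    Returns (average interests per object, share of objects with at least one interest).
    """
    if not mapping:
        return 0.0, 0.0
    n = len(mapping)
    avg = sum(len(v) for v in mapping.values()) / n
    covered = sum(1 for v in mapping.values() if v) / n
    return avg, covered


predicted = ["san francisco", "golden gate bridge", "island", "fog"]
relevant = {"san francisco", "golden gate bridge", "fog"}
print(precision_at_k(predicted, relevant, 3), recall_at_k(predicted, relevant, 3))
print(coverage({"p1": ["san francisco", "fog"], "p2": [], "p3": ["island"]}))
```

Precision and recall pull in opposite directions as k grows, which is why coverage is usually tracked alongside them.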

In addition to Pinterest’s methodology, there are other valuable approaches to constructing interest graphs. External knowledge bases like Wikipedia provide broad domain coverage that can be incorporated into tailored graphs. Twitter’s SimClusters[4] paper exemplifies implicit graph building: it detects communities in the follow graph and uses engagement signals to represent interests and connections.

Major social platforms facilitate users sharing diverse content types that can be mapped to interest graphs, including:

- Facebook - Text, image, and video posts with comments shared publicly or with friends.

- Pinterest - Public image Pins with descriptions.

- YouTube - User-uploaded videos with descriptions and comments.

- Twitter - Short text tweets encouraging active discussions.

- TikTok - User-created short-form videos.

While interest graphs may differ across platforms, heterogeneous user-generated content can be mapped to interest graphs by aligning attributes to relevant graph nodes and edges.

Latent Interest Graph

Beyond knowledge-based graphs, Twitter’s SimClusters[4] demonstrates how to construct latent interest communities by analyzing the user-user follow graph. This approach could be adapted to build an interest graph on Pinterest.

In the paper, the fundamental building block is an undirected, weighted similarity graph between influential users (the followees), computed from their shared followers; modeling this follower overlap distills an implicit interest graph. The key steps are:

- A bipartite following graph is constructed with followers on the left and followees on the right

- The similarity graph nodes are influencer followees from the right partition

- The weight between two followees is determined based on the cosine similarity of their followers on the left partition.

- Edges with similarity scores below a certain threshold are discarded, and a certain number of neighbors with the highest similarity scores are kept for each user.
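The four steps above can be sketched with plain Python sets. This is an illustrative sketch, not Twitter’s implementation: with a binary follow graph, the cosine similarity of two followees reduces to their follower overlap divided by the geometric mean of their follower counts. The function name `followee_similarity_graph` and its default `threshold` and `top_k` values are hypothetical. The all-pairs loop only suits toy data, and per-node top-k pruning can leave the result asymmetric, so one would symmetrize it (e.g., keep an edge if either endpoint retains it) to obtain an undirected graph.

```python
import math
from collections import defaultdict


def followee_similarity_graph(follows, threshold=0.1, top_k=2):
    """follows: iterable of (follower, followee) edges of the bipartite graph.

    Returns {followee: [(neighbor, cosine), ...]} keeping, per followee,
    at most top_k neighbors whose cosine similarity is >= threshold.
    """
    followers = defaultdict(set)  # right-partition node -> its left-partition followers
    for follower, followee in follows:
        followers[followee].add(follower)

    nodes = sorted(followers)
    graph = {}
    for a in nodes:
        scored = []
        for b in nodes:
            if a == b:
                continue
            overlap = len(followers[a] & followers[b])
            if overlap == 0:
                continue
            # Cosine similarity of binary follower vectors.
            cos = overlap / math.sqrt(len(followers[a]) * len(followers[b]))
            if cos >= threshold:
                scored.append((b, cos))
        scored.sort(key=lambda x: (-x[1], x[0]))  # highest similarity first
        graph[a] = scored[:top_k]
    return graph


follows = [("u1", "A"), ("u2", "A"), ("u3", "A"), ("u1", "B"), ("u2", "B"), ("u4", "C")]
print(followee_similarity_graph(follows))
```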

On Pinterest, a similar bipartite graph can be constructed with users on the left partition and Boards on the right. Boards are collections of Pins organized by users around topics, and there are almost two orders of magnitude fewer Boards than Pins, which makes pairwise Board-Board similarity far cheaper to compute than a Pin-level equivalent.

- A bipartite user-Board engagement graph is constructed with users on the left and Boards on the right.

- The similarity graph nodes are Board nodes from the right bipartite partition.

- The weight between two Boards is determined based on the cosine similarity of associated user engagement vectors on the left partition.

- Note that different engagement signals can be tuned to represent similarity, e.g., explicit engagements (like, repin, save, comment, hide, etc.) or a mix of explicit and implicit signals (click, view, etc.).

- Edges with similarity scores below a certain threshold are discarded, and only the top-k most similar Board neighbors are kept for each Board.

- Higher edge scores indicate more similar connected Boards.
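The Board variant differs mainly in using weighted engagement counts instead of binary follows. Below is a NumPy sketch, assuming hypothetical per-action weights in `ENGAGEMENT_WEIGHTS` (a negative weight for `hide` is one way to encode negative feedback) and a dense matrix that only suits toy sizes; at Pinterest scale a sparse matrix and approximate neighbor search would likely be needed.

```python
import numpy as np

# Hypothetical per-action weights; the values are illustrative only.
ENGAGEMENT_WEIGHTS = {"save": 3.0, "repin": 3.0, "comment": 2.0, "click": 1.0, "hide": -2.0}


def board_similarity(events, users, boards, threshold=0.2, top_k=5):
    """events: list of (user_id, board_id, action) tuples.

    Returns {board_id: [(neighbor_board_id, cosine), ...]} with at most top_k
    neighbors per Board whose similarity is at least `threshold`.
    """
    u_idx = {u: i for i, u in enumerate(users)}
    b_idx = {b: j for j, b in enumerate(boards)}

    # User x Board engagement matrix (left partition x right partition).
    M = np.zeros((len(users), len(boards)))
    for user, board, action in events:
        M[u_idx[user], b_idx[board]] += ENGAGEMENT_WEIGHTS[action]

    # L2-normalize each Board column so that dot products are cosine similarities.
    norms = np.linalg.norm(M, axis=0)
    norms[norms == 0] = 1.0
    Mn = M / norms
    S = Mn.T @ Mn

    neighbors = {}
    for j, board in enumerate(boards):
        order = np.argsort(-S[j], kind="stable")
        kept = [(boards[k], float(S[j, k])) for k in order if k != j and S[j, k] >= threshold]
        neighbors[board] = kept[:top_k]
    return neighbors


users, boards = ["u1", "u2", "u3"], ["b1", "b2", "b3"]
events = [("u1", "b1", "save"), ("u1", "b2", "save"), ("u2", "b1", "repin"),
          ("u2", "b2", "click"), ("u3", "b3", "comment")]
print(board_similarity(events, users, boards))
```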

After tuning, the Board similarity graph can be extracted from the right partition. A community detection algorithm such as Louvain[8] can then cluster Boards into latent “interest” communities, each comprising Boards with overlapping user engagement. The result can be represented as <board_id, interest_id, score> triplets, where the score quantifies Board-interest affinity based on shared user engagement.
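Louvain clustering and the triplet output can be sketched with NetworkX (version 2.8 or later provides `louvain_communities`). The `score` here is a hypothetical affinity definition chosen for illustration: the fraction of a Board’s similarity weight that stays inside its own community. Community indices serve as `interest_id` values, and the function name is also hypothetical.

```python
import networkx as nx
from networkx.algorithms.community import louvain_communities


def board_interest_triplets(similarity_edges, seed=0):
    """similarity_edges: iterable of (board_a, board_b, weight) from the pruned Board graph.

    Returns sorted <board_id, interest_id, score> triplets, where interest_id is the
    index of the Louvain community and score is the share of the Board's similarity
    weight that stays inside that community (a hypothetical affinity definition).
    """
    G = nx.Graph()
    G.add_weighted_edges_from(similarity_edges)
    communities = louvain_communities(G, weight="weight", seed=seed)

    triplets = []
    for interest_id, members in enumerate(communities):
        for board in members:
            total = G.degree(board, weight="weight")
            inside = sum(w for _, nbr, w in G.edges(board, data="weight") if nbr in members)
            triplets.append((board, interest_id, inside / total if total else 0.0))
    return sorted(triplets)


# Two tightly connected Board groups joined by one weak edge.
edges = [("a", "b", 1.0), ("b", "c", 1.0), ("a", "c", 1.0),
         ("d", "e", 1.0), ("e", "f", 1.0), ("d", "f", 1.0), ("c", "d", 0.1)]
print(board_interest_triplets(edges))
```

Boards sitting on a community boundary (like `c` and `d` above) get scores below 1, which flags them as less clearly tied to a single interest.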

X2Interest Mapping

Pinterest[3] leverages its interest graph to map various entities to interests and to power diverse machine learning applications:

- Pin to Interest (P2I) - mapping of Pins to interests

- User to Interest (U2I) - mapping of users to interests

- Query to Interest (Q2I) - mapping of search queries to interests

- Home feed ranking - recommendations on your home feed

- Search ranking & retrieval - recommendations from search results

- Ads interest targeting & retrieval - product for showing promoted content

Content-based Pin2Interest

For Pin2Interest, in early stages, the interest extraction relied heavily on Pin text processing:

- Extract various text sources associated with a Pin, including the title, description, link text, Board name, link alt text, image caption, page title, page meta title, page meta description, page meta keywords, etc.

- Normalize text fragments via tokenization and lemmatization

- Interests are extracted by matching the lemmatized text against a dictionary. For example, “car”, “cars”, “car’s” and “cars’” all lemmatize to “car”.

- A set of <interest_id, source, frequency> triplets is generated after the processing.
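The lemmatize-then-match step can be illustrated as follows. This is a sketch: `toy_lemma` is a deliberately crude, hypothetical rule set that only strips possessives and a plural -s (a real system would use a proper lemmatizer), and `INTEREST_DICT` is a made-up three-entry dictionary. Multi-word interests are matched as unigrams and bigrams over the lemmatized tokens, and the output reproduces the “car” example above.

```python
import re
from collections import Counter

# Hypothetical interest dictionary: lemmatized phrase -> interest_id.
INTEREST_DICT = {"car": 101, "san francisco": 202, "road trip": 303}


def toy_lemma(token):
    """Crude stand-in for a lemmatizer: strips possessives and a plural -s only."""
    token = token.rstrip("'")          # cars' -> cars
    if token.endswith("'s"):
        token = token[:-2]             # car's -> car
    if len(token) > 3 and token.endswith("s") and not token.endswith("ss"):
        token = token[:-1]             # cars -> car, trips -> trip
    return token


def extract_interests(sources, max_n=2):
    """sources: {source_name: text}. Returns sorted <interest_id, source, frequency> triplets."""
    counts = Counter()
    for source, text in sources.items():
        normalized = text.lower().replace("\u2019", "'")  # curly apostrophe -> straight
        tokens = [toy_lemma(t) for t in re.findall(r"[a-z']+", normalized)]
        for n in range(1, max_n + 1):
            for i in range(len(tokens) - n + 1):
                phrase = " ".join(tokens[i:i + n])
                if phrase in INTEREST_DICT:
                    counts[(INTEREST_DICT[phrase], source)] += 1
    return sorted((iid, src, freq) for (iid, src), freq in counts.items())


print(extract_interests({"title": "Cars, car's and cars' of San Francisco",
                         "board_name": "Road trips"}))
# [(101, 'title', 3), (202, 'title', 1), (303, 'board_name', 1)]
```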

A supervised logistic regression model was chosen for its simplicity and result quality. Specifically, the top-N (N=25) relevant interests are predicted per Pin, using human-labeled judgment data for training. For example, given a Pin’s image, a human judge may mark “San Francisco” as relevant to the Pin and “island” as irrelevant. Precision@k, recall@k, and coverage were used to evaluate the model. Diverse text-based and count-based features were incorporated, including:

- Word embedding (cohesive)

- Frequency counts from Pin, board and link texts (popularity)

- Normalized TF-IDF scores (uniqueness)

- Category affinities: do interests belong to the same or similar categories? (cohesive)

- Position within text (importance)

- Whitelisting (Wikipedia titles, vertical glossaries/taxonomies, entity dictionaries)

- Graph queries (cohesive)

- Pluralization (normalization)

- Blacklisting

- Head queries (importance)
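A logistic regression ranker over such features might look like the sketch below. It is illustrative only: the four feature columns (tf-idf, frequency, position, category affinity) and the toy training rows are hypothetical, and plain NumPy gradient descent stands in for whatever training library was actually used. The default `n=25` mirrors the top-N cutoff described above.

```python
import numpy as np

# Hypothetical feature columns for one (Pin, candidate interest) pair:
# [normalized tf-idf, frequency count, position score, category affinity]


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_logreg(X, y, lr=0.5, epochs=500, l2=1e-3):
    """Batch gradient descent on the L2-regularized logistic loss."""
    w = np.zeros(X.shape[1])
    b = 0.0
    for _ in range(epochs):
        p = sigmoid(X @ w + b)
        w -= lr * (X.T @ (p - y) / len(y) + l2 * w)
        b -= lr * np.mean(p - y)
    return w, b


def top_n_interests(candidates, features, w, b, n=25):
    """Score every candidate interest for a Pin and keep the n most relevant."""
    scores = sigmoid(features @ w + b)
    order = np.argsort(-scores, kind="stable")[:n]
    return [(candidates[i], float(scores[i])) for i in order]


# Toy human-labeled judgments: 1 = relevant, 0 = irrelevant.
X = np.array([[0.9, 3, 1.0, 0.8], [0.1, 1, 0.2, 0.1], [0.8, 2, 0.9, 0.7], [0.2, 1, 0.1, 0.2]])
y = np.array([1, 0, 1, 0])
w, b = train_logreg(X, y)

pin_features = np.array([[0.85, 3, 0.9, 0.8], [0.15, 1, 0.2, 0.1]])
print(top_n_interests(["san francisco", "island"], pin_features, w, b))
```

A linear model like this keeps each feature’s contribution inspectable, which fits the simplicity rationale given above.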

In production, the interest graph covered 23 out of 32 languages, and ~8 interests were extracted per language per Pin on average.

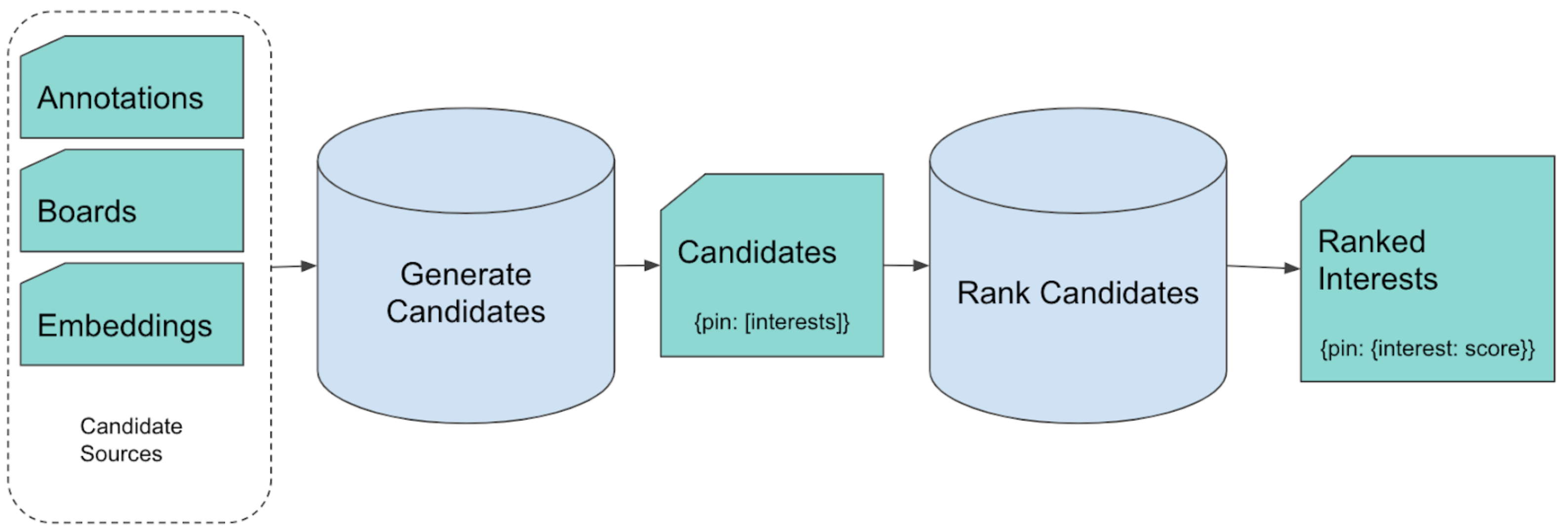

In a later improvement (2019), Pinterest enhanced its Pin2Interest mapping system[3] with a two-stage approach:

- Candidate generation:

  - Generates up to 200 (70 on average) interest candidates per Pin.

  - Uses lexical expansion techniques, such as matching terms within a low edit distance, reordering annotations, and lemmatization.

  - Leverages Pin/Board co-occurrence to aggregate related terms for highly precise lexical matches.

  - Produces a set of <pin_id, interest_id, score> triplets.

- Ranking:

  - Uses a GBDT model to predict whether an interest is relevant to a Pin. Its multifaceted features include:

    - Embedding similarity features (text embeddings such as FastText, Pin embeddings such as PinSage[5])

    - TF-IDF features from Pin annotations[6] (where the Pin is the document and the interest is the term)

    - Taxonomy features (taking advantage of the hierarchy, such as a Level 1 parent match)

    - Pin engagement features (gender, popularity, etc.)

    - Context features (type of Pin, country, etc.)
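A miniature version of both stages is sketched below under stated assumptions: lexical expansion is reduced to word reordering plus a Levenshtein distance of at most one, Pin/Board co-occurrence expansion is omitted, and only one ranking feature (tf-idf with the Pin as document and the interest as term) is computed, using one common smoothed idf variant. The GBDT itself is not shown; these functions would only produce its inputs. All function names are hypothetical.

```python
import math
from collections import Counter


def edit_distance(a, b):
    """Levenshtein distance using a single rolling row of the DP table."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1,                # delete ca
                           cur[j - 1] + 1,             # insert cb
                           prev[j - 1] + (ca != cb)))  # substitute
        prev = cur
    return prev[-1]


def generate_candidates(annotations, vocabulary, max_dist=1, limit=200):
    """Stage 1: expand Pin annotations into candidate interests."""
    candidates = set()
    for ann in annotations:
        for interest in vocabulary:
            same_words = sorted(ann.split()) == sorted(interest.split())  # reordering
            if same_words or edit_distance(ann, interest) <= max_dist:
                candidates.add(interest)
    return sorted(candidates)[:limit]


def tfidf(pin_annotations, interest, corpus):
    """Stage 2 feature: tf of `interest` in this Pin times a smoothed idf over all Pins."""
    tf = Counter(pin_annotations)[interest] / max(len(pin_annotations), 1)
    df = sum(1 for doc in corpus if interest in doc)
    idf = math.log((1 + len(corpus)) / (1 + df)) + 1.0
    return tf * idf


vocab = ["chocolate cake", "carrot cake", "cake decorating"]
print(generate_candidates(["chocolate cakes", "cake chocolate"], vocab))  # ['chocolate cake']
```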

Given the prominence of multi-modal LLMs in 2023, it is worthwhile to investigate fine-tuning such models[12] to model Pin-interest relevance. At a high level, this could leverage a pretrained model like CLIP[11], which jointly embeds text and images. The model input would consist of various dense feature vectors and sparse features, including:

- Pre-trained text embeddings of the Pin title, description, image caption, Board name, etc.

- Pre-trained image embeddings of the Pin’s associated photo

- Pin metadata like author, location, etc.

With a high-quality human-labeled dataset, the model is trained to predict a relevance score for each <pin_id, interest_id> pair. Note that this is an extreme classification[13] problem with tens of thousands of fine-grained interests as labels, so the training procedure requires careful practical design, for example in how negative labels are sampled and how long-tail interests with few examples are handled.
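The scoring side of such a model can be sketched as a dual encoder over precomputed embeddings. This is a hedged sketch under several assumptions: CLIP-style 512-dimensional text and image embeddings are taken as given (frozen), `W_proj` and `interest_table` are randomly initialized stand-ins for trainable parameters, and the loss shows only one common extreme-classification trick, a softmax over the positive label plus a sample of negatives instead of all labels. Fine-tuning the encoders and the optimizer loop are out of scope, and all names and sizes are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: CLIP-style 512-d text and image embeddings, 20k interest labels.
D_TEXT, D_IMAGE, D_JOINT, N_INTERESTS = 512, 512, 256, 20_000

# Randomly initialized stand-ins for trainable parameters.
W_proj = rng.normal(scale=0.02, size=(D_TEXT + D_IMAGE, D_JOINT))
interest_table = rng.normal(scale=0.02, size=(N_INTERESTS, D_JOINT))  # one vector per interest


def pin_vector(text_emb, image_emb):
    """Fuse frozen text and image embeddings and project into the joint space."""
    return np.concatenate([text_emb, image_emb]) @ W_proj


def top_k_interests(text_emb, image_emb, k=10):
    """Score all interests with one matrix-vector product; return the k best, best first."""
    scores = interest_table @ pin_vector(text_emb, image_emb)
    idx = np.argpartition(-scores, k)[:k]  # unordered top-k without a full sort
    return idx[np.argsort(-scores[idx])]


def sampled_softmax_loss(pin_vec, positive_id, num_negatives=100):
    """Cross-entropy over the positive label plus uniformly sampled negatives,
    avoiding a full softmax over all N_INTERESTS labels."""
    negatives = rng.choice(N_INTERESTS, size=num_negatives, replace=False)
    negatives = negatives[negatives != positive_id]
    ids = np.concatenate([[positive_id], negatives])
    logits = interest_table[ids] @ pin_vec
    logits = logits - logits.max()  # numerical stability
    return float(-(logits[0] - np.log(np.exp(logits).sum())))


print(top_k_interests(rng.normal(size=D_TEXT), rng.normal(size=D_IMAGE), k=5))
```

Keeping one vector per interest turns inference into a single matrix-vector product plus a partial sort, which stays cheap even with tens of thousands of labels.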

User2Interest

Once an interest graph has been constructed and content objects like Pins mapped onto it, a robust interest-based user representation can be modeled by incorporating several behavioral signals. One main benefit is that both object and user representations are now mapped to the same interest domain, which can power various downstream applications, e.g., personalized recommendation. A basic approach to derive user representations in the interest space is as follows:

- Extract the user’s recent engagement activities with Pins over a fixed duration (e.g., the past 30 days), including actions like saving, repinning, and sharing Pins. Focusing on explicit, user-initiated interactions helps reduce noise.

- Aggregate the extracted engagements (explicit only, or a mix of explicit and implicit) per Pin.

- Map the engaged Pins onto the interest graph’s nodes using the existing object mapping system (e.g. Pin2Interest). Tally the total engagement frequency for each interest node.

- Normalize the per-interest engagement counts into scores between 0 and 1 that reflect relative user affinity. This accounts for variability in users’ total engagement volumes.

- The final output is a set of <user_id, interest_id, score> triplets that encode the user’s affinity with each interest based on their Pin engagement history.
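The User2Interest recipe above can be sketched as follows. Assumptions: `EXPLICIT_ACTIONS` and the 30-day default window are illustrative, each engagement adds one count to every interest its Pin maps to, and scores are normalized by the user’s total count so they sum to 1 (dividing by the maximum is an equally valid choice that instead pins the top interest at 1). The function name is hypothetical.

```python
from collections import Counter
from datetime import datetime, timedelta

# Hypothetical set of explicit, user-initiated actions to keep.
EXPLICIT_ACTIONS = {"save", "repin", "share"}


def user_to_interest(user_id, engagements, pin2interest, now: datetime, window_days=30):
    """engagements: list of (pin_id, action, timestamp) tuples for one user.
    pin2interest: pin_id -> list of interest_ids (e.g., Pin2Interest output).

    Returns <user_id, interest_id, score> triplets with scores summing to 1.
    """
    cutoff = now - timedelta(days=window_days)
    tally = Counter()
    for pin_id, action, ts in engagements:
        if ts < cutoff or action not in EXPLICIT_ACTIONS:
            continue  # outside the window or not an explicit action
        for interest_id in pin2interest.get(pin_id, []):
            tally[interest_id] += 1
    total = sum(tally.values())
    if total == 0:
        return []
    return sorted(((user_id, iid, c / total) for iid, c in tally.items()),
                  key=lambda t: -t[2])


now = datetime(2024, 1, 31)
pin2interest = {"p1": ["baking", "desserts"], "p2": ["baking"], "p3": ["hiking"]}
engagements = [
    ("p1", "save", now - timedelta(days=1)),
    ("p2", "repin", now - timedelta(days=2)),
    ("p3", "save", now - timedelta(days=40)),   # too old
    ("p3", "click", now - timedelta(days=1)),   # implicit, ignored here
]
print(user_to_interest("u1", engagements, pin2interest, now))
```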

Engagement-based Pin2Interest

The previous Pin2Interest mappings rely primarily on semantic understanding of text, images, etc., which is very helpful when a Pin is fresh and has received few user interactions (the cold-start scenario). One limitation, however, is that the resulting representation is static and doesn’t capture the User-Pin interactions that are critical on an online media platform.

Inspired by SimClusters, an engagement-based Pin2Interest could incorporate User2Interest and user engagement signals to reflect Pin-interest popularity in real time:

- Extract Pin engagement activities over a short time window.

- Aggregate the engaged users’ User2Interest representations, for example by applying a time-decayed aggregation function[9], such as an exponentially decayed average, to the User2Interest vectors of the engaged users.

- Update item representations continuously based on real-time user-item engagements. It is crucial to learn representations of new items efficiently before they lose relevance, since items can rise and fall in popularity rapidly.

- The final output is a set of <pin_id, interest_id, score> triplets that quantifies Pin-interest affinity via user engagement strength
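The decayed aggregation can be sketched with an exponential half-life, in the spirit of the decayed counters in [9]. Assumptions: timestamps are in hours, the 24-hour half-life is arbitrary, and users without a User2Interest vector are skipped rather than diluting the average. This batch version recomputes from scratch; a real-time system would more likely maintain decayed sums incrementally as engagements arrive. The function name is hypothetical.

```python
import math
from collections import defaultdict


def engagement_pin2interest(pin_id, engagements, user2interest, now, half_life_hours=24.0):
    """engagements: list of (user_id, timestamp_in_hours) for one Pin.
    user2interest: user_id -> {interest_id: score} (User2Interest output).

    Returns <pin_id, interest_id, score> triplets: an exponentially time-decayed
    weighted average of the engaged users' User2Interest vectors.
    """
    decay = math.log(2) / half_life_hours  # weight halves every half_life_hours
    acc = defaultdict(float)
    total_weight = 0.0
    for user_id, ts in engagements:
        vector = user2interest.get(user_id)
        if not vector:  # skip users without a User2Interest representation
            continue
        w = math.exp(-decay * (now - ts))
        total_weight += w
        for interest_id, score in vector.items():
            acc[interest_id] += w * score
    if total_weight == 0.0:
        return []
    return sorted(((pin_id, iid, s / total_weight) for iid, s in acc.items()),
                  key=lambda t: -t[2])


u2i = {"u1": {"baking": 1.0}, "u2": {"hiking": 1.0}}
# u1 engaged a day ago (weight 0.5), u2 just now (weight 1.0).
print(engagement_pin2interest("p1", [("u1", 0.0), ("u2", 24.0)], u2i, now=24.0))
```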

It would be interesting to examine whether these engagement-based Pin2Interest signals can increase user engagement in different ML applications, e.g., trending Pin recommendations and Ads user targeting.

References

- [1] Building the Interests Platform

- [2] Taste Graph part 1: Assigning Interests to Pins

- [3] Pin2Interest: A scalable system for content classification

- [4] SimClusters: Community-Based Representations for Heterogeneous Recommendations at Twitter

- [5] PinSage: A new graph convolutional neural network for web-scale recommender systems

- [6] Understanding Pins through keyword extraction

- [7] ClusterFit: Improving Generalization of Visual Representations

- [8] Fast Unfolding of Communities in Large Networks

- [9] Counters in Recommendations: From Exponential Decay to Position Debiasing

- [10] PinnerSage: Multi-Modal User Embedding Framework for Recommendations at Pinterest

- [11] Learning Transferable Visual Models From Natural Language Supervision

- [12] ClipCap: CLIP Prefix for Image Captioning

- [13] Extreme Classification

- [14] A Survey on Extreme Multi-label Learning